Most creative AI tools today work like vending machines: type in what you want, press a button, and something drops into your lap. Useful, yes — but real creative work doesn’t happen in vending machines. It happens in studios.

A studio isn’t just a place; it’s a habitat. If I ever had the chance to visit David Hockney’s studio in LA, I’d expect to see paintings in progress next to iPads, reference photos clipped beside oil palettes, analog and digital tools blending into each other. The studio is a living organism in its own right. It’s multimodal by default, built for iteration, for accidents, and for the retrieval of half-forgotten fragments.

Interfaces should be able to capture that.

Technically, we’re ready for this. Practically, we’re not there yet. There’s likely a ton of creative intelligence locked behind interfaces that are either overcomplicated or limiting by design (sometimes both…). Discovering new model capabilities in these frameworks is an art in itself.

Not long ago, creators discovered that Veo 3 can follow doodles and annotations drawn directly onto the input frame in frame-to-video mode. You can even upload collage-like images. With the right prompts and *only a few edits (😵💫)*, you get almost unlimited directorial control.

Yet the core product experience is still built around a static upload field and a single prompt bar that resets after each run. Every generation feels like a disconnected transaction: you hand over files or text, the model disappears behind the curtain, and a few seconds later, pixels drop out → vending machine vibes. Imagine instead replacing the upload slot with a drawable canvas: a space where you can mark, sketch, and annotate directly. Imagine those sketches, text notes, and brushstrokes all living in the same place, speaking the same prompting language.

> O God, I could be bounded in a nutshell and count myself a King of infinite space. (Hamlet, II, 2)

The beauty of this lies in its adaptability and scalability beyond sketches, arrows, or text. You can go much further, eventually turning the 2D canvas into an explorable stage where you can move objects and people around as if directing a scene.

Another problem yet to be solved is what I call an infinite creation flow. Platforms like Runway or Flora seem pretty close, with a fairly media-agnostic focus on creation over consumption,¹ but so much else about their UI breaks my personal flow as a creator.

Users want to iterate extensively on a single idea using different features, prompts, and assets. They want to do this in a dedicated environment, maybe something resembling a chat or a storyboard. Creation is, ultimately, conversation and interaction. It should feel a bit more like that tbh.

One solution would be persistent generation sessions, in which the model remembers your moves and momentum and can build on them. That shift would turn each run from an API call that retrieves pixels into something closer to a painting session: iterative and cumulative.

An interface as a studio should:

- Accept multimodal inputs: image, video, text, sound, gesture.
- Make iteration and remixing as intuitive as drawing a line.
- Offer interface boundaries not as friction, but as form-giving constraints.
- Allow chaos to coexist with control → the creative kind of mess needed to give birth to a star.

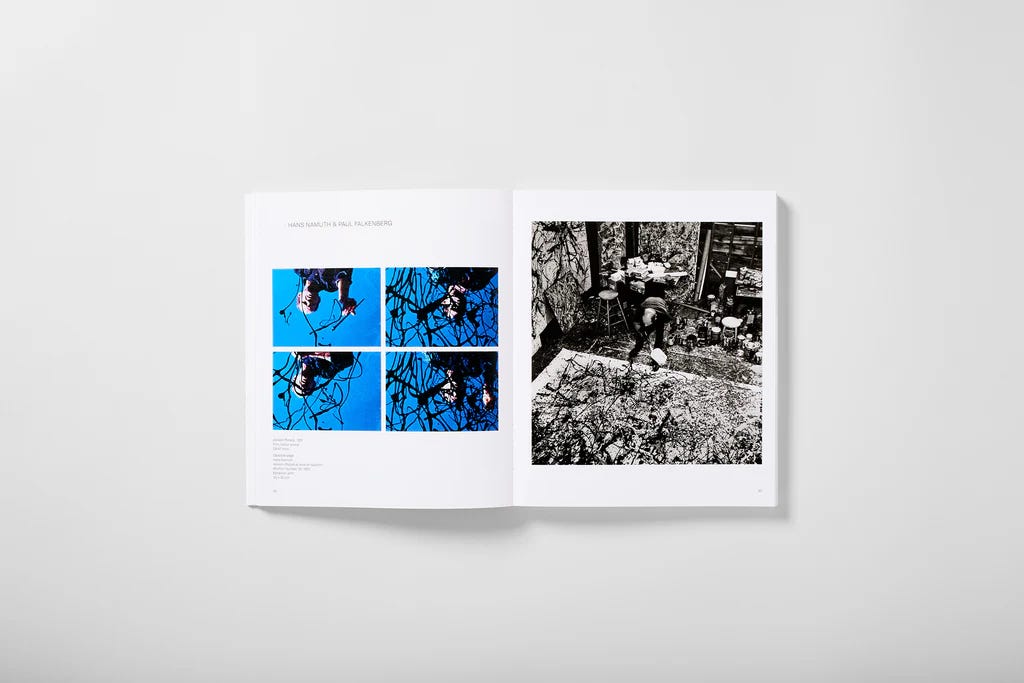

Artists’ studios have always held a certain fascination, from Vermeer’s dimly lit rooms to Warhol’s silver-painted Factory. Now, more than ever, we also need virtual spaces where tools and works-in-progress exist in visible conversation. AI tools can bring that back, not by mimicking the look of a studio, but by reproducing its conditions for flow, play, and discovery.

Real-time generation of videos and environments pushes this further. Creation will feel more like moving wet paint than retrieving a finished print. In this ‘wet paint’ state, every gesture matters and change is instantaneous. It’s a shift from treating AI as a black box that delivers results to treating it as a surface you actively shape by interacting with it (or even within it). An experience that’s tactile and responsive.

The next generation of AI tools shouldn’t just be about lower latency and more control over the output. They should be about inhabiting a place where all your tools, materials, and unfinished ideas are within reach, and where the boundaries between imagining and visualizing collapse.

Because vending machines give you products. Studios give you stories.

Thanks to Jerrod Lew, August Kamp, and Elon Rutberg who inspired me to write this.

¹ This aspect is interesting: artist studios, like most generative media platforms today, have always blurred the lines between private production and public display. Here’s a book on that.